Frank Guenther has been working for more than two decades on a neural model of speech that he’s using to create software he hopes will translate thoughts to words.

Photo by Kalman Zabarsky

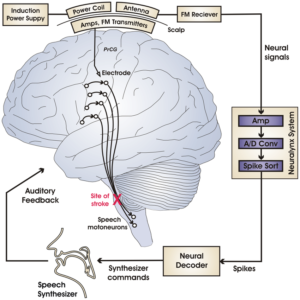

A group of researchers at Boston University (U.S.), led by Frank H. Guenther, built a system that turns brain waves into FM radio signals and decodes them as sound. It is the first totally wireless brain-computer interface (BCI) capable of doing so. [1]

“It decodes continuous auditory parameters for a real-time speech synthesizer from neuronal activity in motor cortex during attempted speech,” the researchers point out. [1]

The research was published on December 9, 2009, in PLOS ONE and is listed on NCBI's PubMed. It is the result of an applied medical experiment conducted with Erik Ramsey, a 26-year-old patient left almost entirely paralyzed by a horrific car accident 10 years earlier. The accident caused a brain-stem stroke that cut the connection between his mind and his body. [1][2][3][5]

The implant system tested by Ramsey was originally designed by Phil Kennedy, a pioneer in brain-computer interface research, who had National Institutes of Health backing for a start-up company called Neural Signals, based in Duluth, Georgia.

Phil Kennedy

The BU scientists, Frank Guenther, professor of cognitive and neural systems, and Jonathan Brumberg, a postdoctoral research associate in Guenther’s lab, are working to help Ramsey and others who have lost the ability to speak. Guenther has been developing a neural model of speech for more than two decades. [4]

The system was designed to receive Ramsey’s brain signals wirelessly from the implanted electrode as he imagined speaking and to decode that neural activity into real-time speech via a voice synthesizer. [1]

From left, Dr. Philip R. Kennedy, Erik Ramsey (in chair), Eddie Ramsey, Jess Bartels, Jon Brumberg, and Frank Guenther.

(Globe staff Photo / David Kamerman)

Ramsey can only express vowel sounds with the system. That’s less than can be accomplished with wired brain-computer interfaces. But it’s still a promising step.

A neurological model constructed by Guenther maps Ramsey’s brain activity to the corresponding mouth and jaw movements. Another program decodes the signals and synthesizes them as a tinny but human-like voice.
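The decode-then-synthesize pipeline described above can be illustrated with a small Python sketch. It is not the researchers' method: a plain linear map stands in for Guenther's neural model, the weights, offsets, and the choice of two frequency-like parameters are made up for illustration, and the "synthesizer" is just a sum of sine waves.

```python
import math

# Hypothetical decoder weights: one row per auditory parameter,
# one column per recorded neural unit. A real system would fit these
# to recorded data; the numbers here are invented for illustration.
WEIGHTS = [
    [12.0, -4.0, 6.0],   # contribution of each unit to parameter 1
    [-8.0, 20.0, 10.0],  # contribution of each unit to parameter 2
]
OFFSETS = [500.0, 1500.0]  # assumed resting values, in Hz


def decode(firing_rates):
    """Map one frame of firing rates to two auditory parameters (Hz)."""
    return [
        offset + sum(w * r for w, r in zip(row, firing_rates))
        for row, offset in zip(WEIGHTS, OFFSETS)
    ]


def synthesize(params, num_samples=80, sample_rate=8000):
    """Render one short audio frame as an average of sinusoids
    at the decoded frequencies (a stand-in for a speech synthesizer)."""
    return [
        sum(math.sin(2 * math.pi * f * t / sample_rate) for f in params)
        / len(params)
        for t in range(num_samples)
    ]


# One frame: three units firing at 1.0, 2.0 and 0.5 spikes per frame.
params = decode([1.0, 2.0, 0.5])
frame = synthesize(params)
```

Running `decode` and `synthesize` once per incoming frame of neural data is what would make such a loop "real-time"; how the actual system handles timing and smoothing is not covered here.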

For now, the computer that translates Ramsey’s mental broadcasts is still in a laboratory. “But our goal is to have him transmit directly to a laptop,” said Guenther. [3]

References

[1] http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0008218

[2] https://www.ncbi.nlm.nih.gov/pubmed/20011034

[3] http://www.bu.edu/bostonia/spring09/silence/

[4] https://www.wired.com/2009/12/wireless-brain/

[5] https://en.wikipedia.org/wiki/List_of_people_with_locked-in_syndrome#Erik_Ramsey